About five years ago, graduate students at the University of Illinois embarked on a project for their ASTRO 596 class. That project has since evolved into sophisticated machine learning software that can identify objects such as stars and galaxies in telescope images.

The project, known as Detection, Instance Segmentation, and Classification with Deep Learning, or DeepDISC, leverages machine learning, a branch of artificial intelligence (AI) within computer science. Instead of following explicitly programmed rules, a machine learning model learns patterns from example data. Training adjusts the model's internal parameters so that its predictions improve as it sees more examples.

More specifically, DeepDISC uses deep learning, a subfield of machine learning built on neural networks, which are loosely inspired by the networks of neurons in the human brain. These networks pass input data through many layers of computation to generate outputs.

Astronomy professor Xin Liu leads the research group Astro-AI, which is at the helm of DeepDISC’s development. Grant Merz, a graduate student, plays a pivotal role in the project as part of his thesis work, contributing to both the development of the codebase and the scientific analysis.

The group collaborates with the LSST Discovery Alliance, an organization that aims to maximize the scientific and societal impact of the Legacy Survey of Space and Time (LSST) at Rubin Observatory, which is funded jointly by the National Science Foundation and the U.S. Department of Energy. Their efforts include integrating DeepDISC with the software used by LSST.

DeepDISC receives funding from several sources, including the LINCC Frameworks Incubator program, supported by Schmidt Sciences through the LSST Discovery Alliance. Additional support comes from NSF, NASA, a National Center for Supercomputing Applications faculty fellowship, and NCSA’s Students Pushing INnovation internship programs.

Liu and Merz eagerly anticipate the opening of the Vera C. Rubin Observatory, currently under construction in Chile, which is expected to produce “unprecedented” amounts of data.

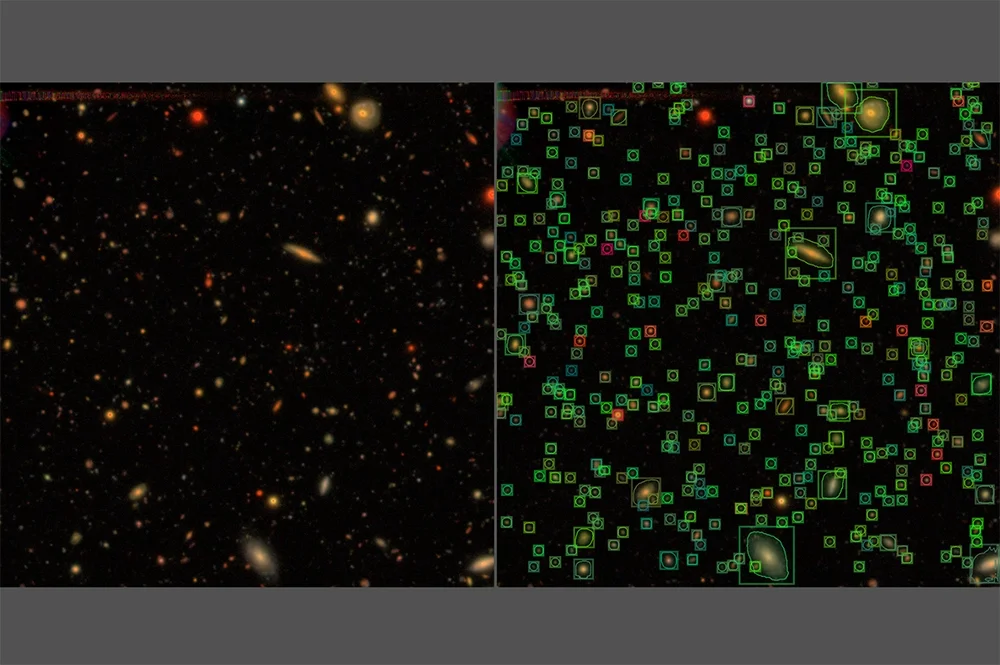

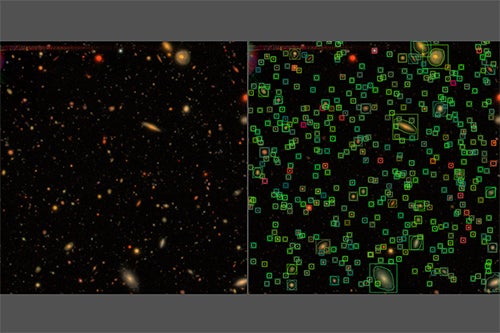

“Photometric redshift, or photo-z, estimation is an important task in survey pipelines for cosmological analyses, as spectroscopic redshift measurements are too costly for large surveys,” reads the project description on LINCC. “Existing frameworks for photo-z estimation include template fitting methods, as well as machine/deep learning models. Image-based models are able to incorporate colors as well as morphology information into predictions. Our method, DeepDISC, uses instance segmentation models to simultaneously detect, deblend, and estimate source redshifts using images as input. It is an efficient method for extracting object features in large cutouts, and can be applied to a variety of image sizes with varying source density.”

Identifying objects involves collecting multiple images of the same patch of sky, which are then combined to create a clearer image, according to Merz. Currently, the program works with preprocessed images, but the researchers plan to integrate real-time processing capabilities in the future.
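To illustrate why combining exposures gives a clearer image, here is a minimal sketch that averages simulated noisy exposures of the same patch of sky. It is not DeepDISC's pipeline: the function names, image size, and noise level are assumptions chosen for illustration, and real coadds also require aligning and calibrating the exposures first.

```python
import random

def simulate_exposure(true_image, noise_sigma, rng):
    """Return one noisy exposure of a small 2D image (a list of rows)."""
    return [[pixel + rng.gauss(0.0, noise_sigma) for pixel in row]
            for row in true_image]

def coadd(exposures):
    """Average already-aligned exposures pixel by pixel."""
    n = len(exposures)
    n_rows = len(exposures[0])
    n_cols = len(exposures[0][0])
    return [[sum(exp[r][c] for exp in exposures) / n for c in range(n_cols)]
            for r in range(n_rows)]

def rms_error(image, true_image):
    """Root-mean-square difference between an image and the noiseless truth."""
    diffs = [(p - t) ** 2
             for row, true_row in zip(image, true_image)
             for p, t in zip(row, true_row)]
    return (sum(diffs) / len(diffs)) ** 0.5

rng = random.Random(0)                      # fixed seed for repeatability
true_image = [[0.0] * 16 for _ in range(16)]
true_image[8][8] = 5.0                      # one faint point source

exposures = [simulate_exposure(true_image, 1.0, rng) for _ in range(25)]
stacked = coadd(exposures)

single_rms = rms_error(exposures[0], true_image)
stacked_rms = rms_error(stacked, true_image)
print(f"single exposure RMS noise: {single_rms:.2f}")
print(f"25-exposure coadd RMS noise: {stacked_rms:.2f}")
```

For independent noise, averaging N exposures lowers the noise by roughly a factor of the square root of N, so the coadd of 25 exposures should be about five times cleaner than any single one.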

One of the most challenging aspects of the project, Merz noted, is what he refers to as “training data.”

“DeepDISC relies on these AI models that are supervised, which means that to train them, we need some form of pre-labeled information,” Merz explained.

This pre-labeled information, known as ground truth in machine learning, is hard to obtain because the project works with real observations, in which the true locations of objects such as stars are not known in advance. “We don’t know exactly where objects are beforehand,” he said.
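To make the idea of a pre-labeled training example concrete, the sketch below shows one way a label for an instance segmentation model could be represented: a class, a bounding box, and a pixel mask. The `ObjectLabel` class, its field names, and the `bbox_from_mask` helper are hypothetical and are not DeepDISC's actual data format.

```python
from dataclasses import dataclass

@dataclass
class ObjectLabel:
    """One hypothetical training label for a single object in an image."""
    category: str   # for example "star" or "galaxy"
    bbox: tuple     # (x_min, y_min, x_max, y_max), inclusive pixel indices
    mask: list      # 2D list of booleans, True where the object is

def bbox_from_mask(mask):
    """Compute the tightest bounding box around the True pixels of a mask."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for row in mask for x, value in enumerate(row) if value]
    if not ys:
        raise ValueError("mask is empty")
    return (min(xs), min(ys), max(xs), max(ys))

# A tiny 4 x 5 pixel mask marking where one object sits in the image.
mask = [
    [False, False, False, False, False],
    [False, True,  True,  False, False],
    [False, True,  True,  True,  False],
    [False, False, False, False, False],
]

label = ObjectLabel(category="galaxy", bbox=bbox_from_mask(mask), mask=mask)
print(label.category, label.bbox)
```

With real data, every such mask and class has to be estimated by some other method, which is why the quality of the labels limits what a supervised model can learn.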

Another issue they encounter is deblending, a process in astronomy that involves separating and characterizing overlapping light sources in images. Most objects in images from modern telescopes appear blended.

“If you point a highly sensitive telescope at a super bright star, that star often leaves optical artifacts like telescope ghosts and bleeding trails,” he explained. “It’s physically impossible to know for sure how to deblend something. We have to use our best guess to create those training labels.”

In the future, Merz hopes the team will use simulations to obtain exact ground truth for deblending. “With simulations, we know exactly where objects are and what they looked like beforehand,” he said. “So we can, in a sense, perform true deblending that we couldn’t do with real data.”
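The advantage of simulations can be shown with a toy example. The sketch below renders two overlapping sources separately and then adds them, so the blended image comes with exact per-source truth. The Gaussian source model, positions, fluxes, and threshold are all assumptions for illustration and are far simpler than a realistic survey simulation.

```python
import math

def gaussian_source(size, x0, y0, flux, sigma):
    """Render one circular Gaussian source on a size x size pixel grid."""
    norm = flux / (2.0 * math.pi * sigma ** 2)
    return [[norm * math.exp(-((x - x0) ** 2 + (y - y0) ** 2) / (2.0 * sigma ** 2))
             for x in range(size)]
            for y in range(size)]

def add_images(a, b):
    """Add two images pixel by pixel."""
    return [[pa + pb for pa, pb in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]

def truth_mask(source, threshold):
    """Mark the pixels where a single, unblended source exceeds a threshold."""
    return [[pixel > threshold for pixel in row] for row in source]

size = 32
source_a = gaussian_source(size, x0=14.0, y0=16.0, flux=100.0, sigma=2.0)
source_b = gaussian_source(size, x0=18.0, y0=16.0, flux=60.0, sigma=2.0)

# The blended image is what a telescope would record.
blended = add_images(source_a, source_b)

# Because each source was rendered separately, its true mask is known exactly.
mask_a = truth_mask(source_a, threshold=0.5)
mask_b = truth_mask(source_b, threshold=0.5)
overlap = sum(a and b
              for row_a, row_b in zip(mask_a, mask_b)
              for a, b in zip(row_a, row_b))
print(f"pixels claimed by both sources: {overlap}")
```

In real observations only `blended` is available. In a simulation, `source_a`, `source_b`, and their masks can serve directly as training labels.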

DeepDISC has been tested on data from Hyper Suprime-Cam (HSC), a digital camera mounted on the Subaru Telescope on Maunakea, Hawaii. “We found that DeepDISC significantly outperforms traditional methods of star-galaxy classification,” Merz said.

Liu emphasized that DeepDISC is robust and adaptable to different telescopes beyond the HSC, and it is capable of handling large datasets with high accuracy in galaxy classification.

Liu highlighted Merz’s invaluable contributions to the project.

“His work on the LINCC Frameworks Incubator development and the integration of the photometric redshift module has laid a solid foundation for the project's future expansions,” she said.

Merz noted that other AI methods are being used for deblending, but many are still in their infancy.

“It’s crucial to develop new tools that efficiently handle detection and deblending across various contexts,” Merz said. “We’re excited to apply DeepDISC to new data and simulations as they become available.”

Liu added that the team aims to further improve DeepDISC’s robustness and accuracy. By making the project open source on GitHub, they allow others to contribute to its ongoing development. Liu also plans to expand dataset resources and educational outreach to make DeepDISC more accessible.

Beyond astronomy, DeepDISC’s image analysis capabilities may extend to other fields such as medicine and environmental science, Liu suggested.

“By pursuing these expansion plans, DeepDISC aims to remain at the forefront of AI-driven astronomical research, contributing to significant scientific discoveries and advancing the field through cutting-edge technology and collaborative efforts,” Liu said.